Welcome!

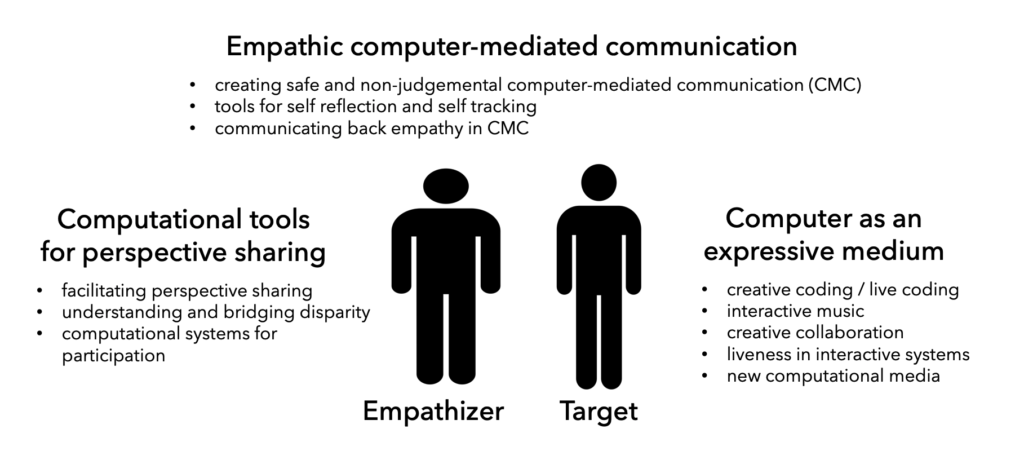

Welcome to the echolab website at Virginia Tech. Our mission is to explore and develop ways of using computational systems to foster empathic interactions among individuals. Our research is grounded in the empathy framework depicted above and focuses on three key themes: computational tools that help empathizers share perspectives, computers as an expressive medium for targets, and the facilitation of empathic communication between both groups. We are also committed to studying how to create safe environments in which targets can be vulnerable.

To Prospective Students

echolab is not recruiting any graduate students until further notice.

echolab is always looking for Ph.D. students who are interested in creating novel interactive systems. We are also looking for exceptional undergraduate and master's students to work with. Please apply to CS@VT.

If you are at Virginia Tech already and would like to do research in echolab, please fill out the following survey, and I will respond. https://forms.gle/gfsvheq8U6YNrGby7

We envision that echolab is…

- A platform where members develop into independent researchers and achieve their career goals.

- An opportunity for individuals to discover that giving (through teaching, helping, sharing, and collaborating) enhances their own growth and success.

- A source of social capital that enables learning from each other.

- A community where inclusion is prioritized, and members feel connected.

- A group that contributes to society and the world by producing and disseminating new knowledge.

- An environment where individuals can be more productive than in any other setting.

- A socio-technical system designed to scale the above visions.

Recent News

- (2025/11) We presented a paper at the ACM CSCW conference

- (2025/8) We presented a paper at the ACM ICER conference

- (2025/7) We presented a paper at the ACM CUI conference

- (2025/6) Sang Won Lee gave a keynote speech at the SIGCHI Korea Chapter Summer Event. (link)

- (2025/6) Sang Won Lee’s tenure was approved! (link)

- (2025/5) Sang will be spending his sabbatical year at NAVER AI Lab (Host: Dr. Young-ho Kim).

- (2025/5) Congratulations to Robin Lu and Rodney Okyere for successfully defending their M.S. theses!

- (2025/3) One CHI paper and two Late-Breaking Work (LBW) submissions were accepted. echolab is going to CHI!

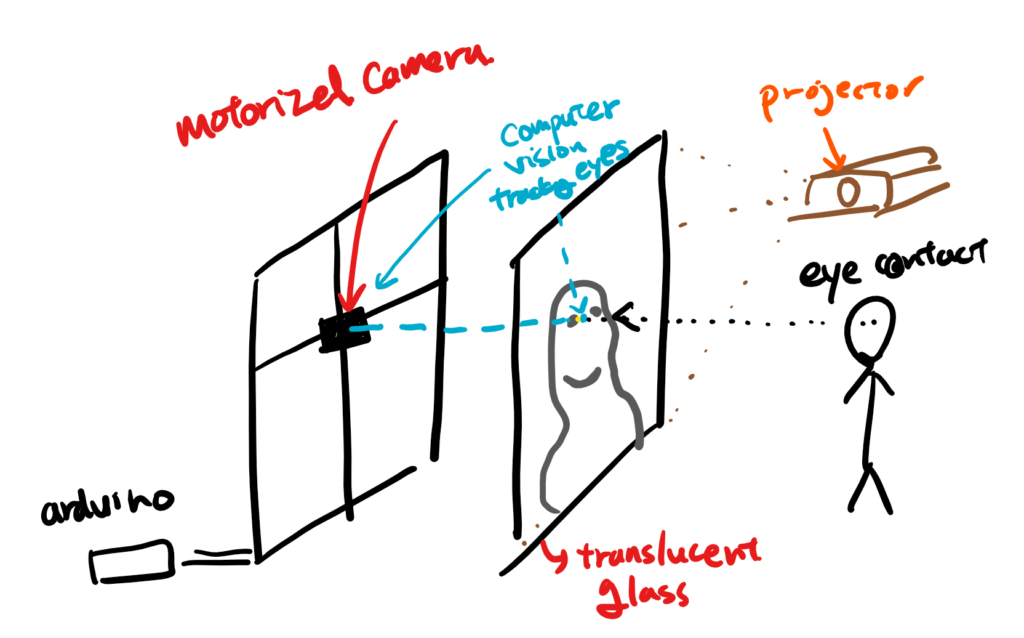

  - Investigating the Effects of Simulated Eye Contact in Video Call Interviews (Full Paper)

  - DUET: Exploring Event Visualizations on Timelines (LBW)

  - “Look at My Planet!”: How Handheld Virtual Reality Shapes Informal Learning Experiences (LBW)

- (2024/12) Congratulations to Andy Luu and Sulakna Karunaratna for successfully defending their M.S. theses!

- (2024/11) Sang Won Lee was invited to give a talk at CITIC at the University of Costa Rica (link)

- (2024/11) One SIGCSE poster was accepted.

  - Understanding the Effects of Integrating Music Programming and Web Development in a Summer Camp for High School Students

- (2024/9) Congratulations to Donghan Hu for successfully defending his dissertation!

- (2024/9) Congratulations to Donghan Hu on his postdoctoral position at New York University. We look forward to his new adventure!

- (2024/8) Sang Won Lee was interviewed by WDBJ7 TV about echolab's recent cybergrooming project. (link)

News older than about a year is available here.

Research Highlights

(ongoing) Facilitating socially constructed learning through a shared, mobile-based virtual reality platform in informal learning settings

TBD

TBD