Vision

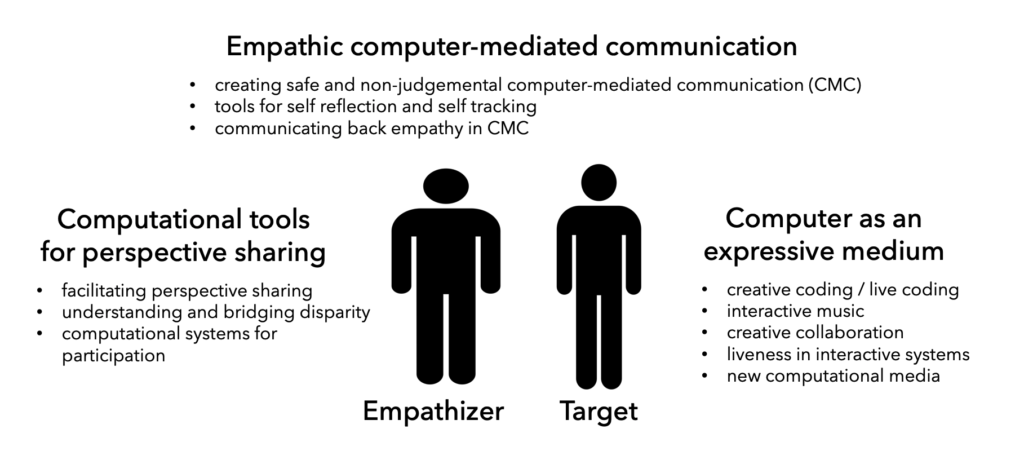

Welcome to the website of echolab at Virginia Tech. Our mission is to understand and create ways to foster empathic interaction among people using computational systems. Our research is based on the framework of empathy depicted in the figure and centers on three themes: computational tools for perspective sharing by empathizers, computers as an expressive medium for targets (or empathizees), and the facilitation of empathic communication for both groups.

To Prospective Students

echolab is always looking for Ph.D. students who are interested in creating novel interactive systems. We are also looking for exceptional undergraduate and master's students to work with. Please apply to CS@VT.

If you are already at Virginia Tech and would like to do research in echolab, please fill out the following survey and I will respond: https://forms.gle/gfsvheq8U6YNrGby7

Recent News

- (2024/6) SHARP received an Honorable Mention Award at ACM C&C 2024.

- (2024/5) One more CSCW 2024 paper was accepted:

  - Investigating Characteristics of Media Recommendation Solicitation in r/ifyoulikeblank

- (2024/5) Congratulations to Daniel Vargas, Yi Lu, and Emily Altland for passing their master’s thesis exam!

- (2024/5) Many echolab students will attend CHI workshops and present four workshop papers!

- (2024/5) One C&C 2024 paper was accepted!

  - SHARP: Exploring Version Control Systems in Live Coding Music

- (2024/4) Sang Won Lee and collaborators were awarded a CHCI planning grant to study how generative AI can affect the values of expertise.

- (2024/3) Sang Won Lee was awarded an NSF SaTC program grant as a Co-PI. The collaborative team of researchers will focus on empowering adolescents to be resilient to cybergrooming.

- (2024/2) One CHI paper was accepted:

  - Exploring the Effectiveness of Time-lapse Screen Recording for Self-Reflection in Work Context

- (2024/2) Sang gave a seminar at the GVU Lunch Lectures at Georgia Tech. (Talk Video)

- (2024/2) Sang gave a seminar in the CS Colloquium Talks series at the University of Pittsburgh (Talk Video).

- (2024/2) Sang gave a seminar at the Human-Computer Interaction Institute at Carnegie Mellon University.

- (2024/1) Two CSCW 2024 papers were accepted:

  - Understanding Multi-user, Handheld Mixed Reality for Group-based MR game

  - Understanding the Relationship Between Social Identity and Self-Expression Through Animated Gifs on Social Media

- (2023/11) Sang was invited to give a talk at the HCI@KAIST Fall Colloquium 2023.

- (2023/11) Sang was invited to give a guest lecture in Prof. Kenneth Huang’s class, Crowdsourcing & Crowd-AI Systems, at Penn State University.

- (2023/8) Two posters were accepted to ACM UIST 2023:

  - TaleMate: Collaborating with Voice Agents for Parent-Child Joint Reading Experiences

  - Context-Aware Sit-Stand Desk for Promoting Healthy and Productive Behaviors

- (2023/7) One paper was accepted to VL/HCC 2023:

  - Octave: an End-user Programming Environment for Analysis of Spatiotemporal Data for Construction Students

- (2023/6) Congratulations to Md Momen Bhuiyan on successfully defending his dissertation.

- (2023/6) Congratulations to Md Momen Bhuiyan on getting a tenure-track faculty position at the University of Minnesota Duluth. We are super excited about his future research!

- (2023/5) Congrats to Marx Boyuan Wang on successfully defending his master’s thesis. He will continue HCI research at iSchool@UW.

- (2023/5) Sang Won Lee gave a seminar as part of the NAVER Tech Talk series at NAVER AI Lab, Korea.

- (2023/5) Congrats to Danny Manesh on winning the GTA Award 2023!

- (2023/5) Congrats to Teresa Thomas on winning the David Heilman Research Award 2023!

More news items are available here.

Research Highlights

(ongoing) Facilitating socially constructed learning through a shared, mobile-based virtual reality platform in informal learning settings

TBD

TBD